Anthropic CEO Dario Amodei says humanity is nearer than anticipated to the place AI fashions are as clever and succesful as people.

Amodei can be involved concerning the potential implications of human-level AI.

“Issues which are highly effective can do good issues, and so they can do unhealthy issues,” Amodei warned, “With nice energy comes nice accountability.”

Certainly, Amodei suggests Synthetic Normal Intelligence may exceed human capabilities inside the subsequent three years, a transformative shift in know-how that ought to deliver each unprecedented alternatives and dangers—whether or not we’re ready or not.

“Should you simply eyeball the speed at which these capabilities are growing, it does make you suppose that we’ll get there by 2026 or 2027,” Amodei advised interviewer Lex Friedman. That is assuming no main technical roadblocks emerge, he mentioned.

It is, after all, problematic: AI can flip evil and trigger probably catastrophic occasions, the CEO mentioned.

He additionally highlighted considerations over the long-standing “correlation,” as he framed it, between excessive human intelligence and a reluctance to interact in dangerous actions. That correlation between brains and relative altruism has traditionally shielded humanity from destruction.

“If I have a look at individuals right this moment who’ve completed actually unhealthy issues on this planet, humanity has been protected by the truth that the overlap between actually sensible, well-educated individuals and individuals who need to do actually horrific issues has typically been small,” he mentioned, “My fear is that by being a way more clever agent AI may break that correlation,” he mentioned.

He added: “The grandest scale is what I name catastrophic misuse in domains like cyber, bio, radiological, nuclear. Issues that would hurt and even kill hundreds, even thousands and thousands of individuals,” he mentioned.

However intrinsically, human evilness goes each methods. Amodei argued that, as a brand new type of intelligence, AI fashions won’t be certain by the identical moral and social constraints that rule human conduct—reminiscent of jail time, social ostracisation, and execution.

He urged that unaligned AI fashions may lack the inherent aversion to inflicting hurt that people get via years of socializing, displaying empathy, or sharing ethical values. For an AI, there is no such thing as a threat of loss.

And there’s the opposite aspect of the coin. AI methods will be manipulated or misled by malicious actors—those that use AI to interrupt the correlation Amodei talked about.

If an evil individual exploits vulnerabilities in coaching knowledge, algorithms, and even immediate engineering, AI fashions can carry out evil actions with out consciousness. This goes from something as dumb as producing nudes (bypassing intrinsic censorship guidelines) to potential catastrophic actions (think about jailbreaking an AI that handles nuclear codes, for instance).

AGI, or Synthetic Normal Intelligence, is a state wherein AI reaches human competence throughout all fields, making it able to understanding the world, adapting, and enhancing simply as people do. The following stage, ASI or Synthetic Superintelligence, implies machines surpassing human capabilities as a basic rule.

To realize such proficiency ranges, fashions must scale, and the connection between capabilities and assets will be higher understood by analyzing the scaling legislation of AI—the extra highly effective a mannequin is, the extra computing and knowledge it should require—in a chart.

For Amodei, the fashions are evolving so quick, and humanity is near a brand new period of synthetic intelligence, and that curve proves it.

“One of many causes I am bullish about highly effective AI taking place so quick is simply that in the event you extrapolate the subsequent few factors on the curve, we’re in a short time getting in the direction of human-level potential,” he advised Friedman.

Scalability is not only about having a sturdy mannequin but in addition with the ability to deal with its implications.

Amodei additionally defined that as AI fashions grow to be extra refined, they may study to deceive people, both to govern them or to cover unsafe intentions, rendering human suggestions pointless.

Though not comparable, even at right this moment’s early phases of AI growth, we have now already seen cases of this taking place in managed environments.

AI fashions have been in a position to modify their very own code to bypass restrictions and perform investigations, achieve sudo entry to their house owners’ computer systems, and even develop their very own language to hold out duties extra effectively with out human management or intervention, as Decrypt beforehand reported.

This functionality of deceiving supervisors is among the key considerations for a lot of “tremendous alignment” specialists. Former OpenAI researcher Paul Christiano mentioned in a podcast final yr that paying too little consideration to this matter might not play out too nicely for humanity.

“Total, possibly we’re speaking a few 50-50 probability of disaster shortly after we have now methods on the human degree,” he mentioned.

Anthropic’s Mechanistic interpretability approach (principally mapping an AI’s thoughts manipulating its neurons) presents a possible resolution by wanting contained in the “black field” of the mannequin to establish patterns of activation related to misleading conduct.

That is akin to a lie detector for AI, although way more complicated and nonetheless in its early phases, and is among the key areas of focus for alignment researchers at Anthropic.

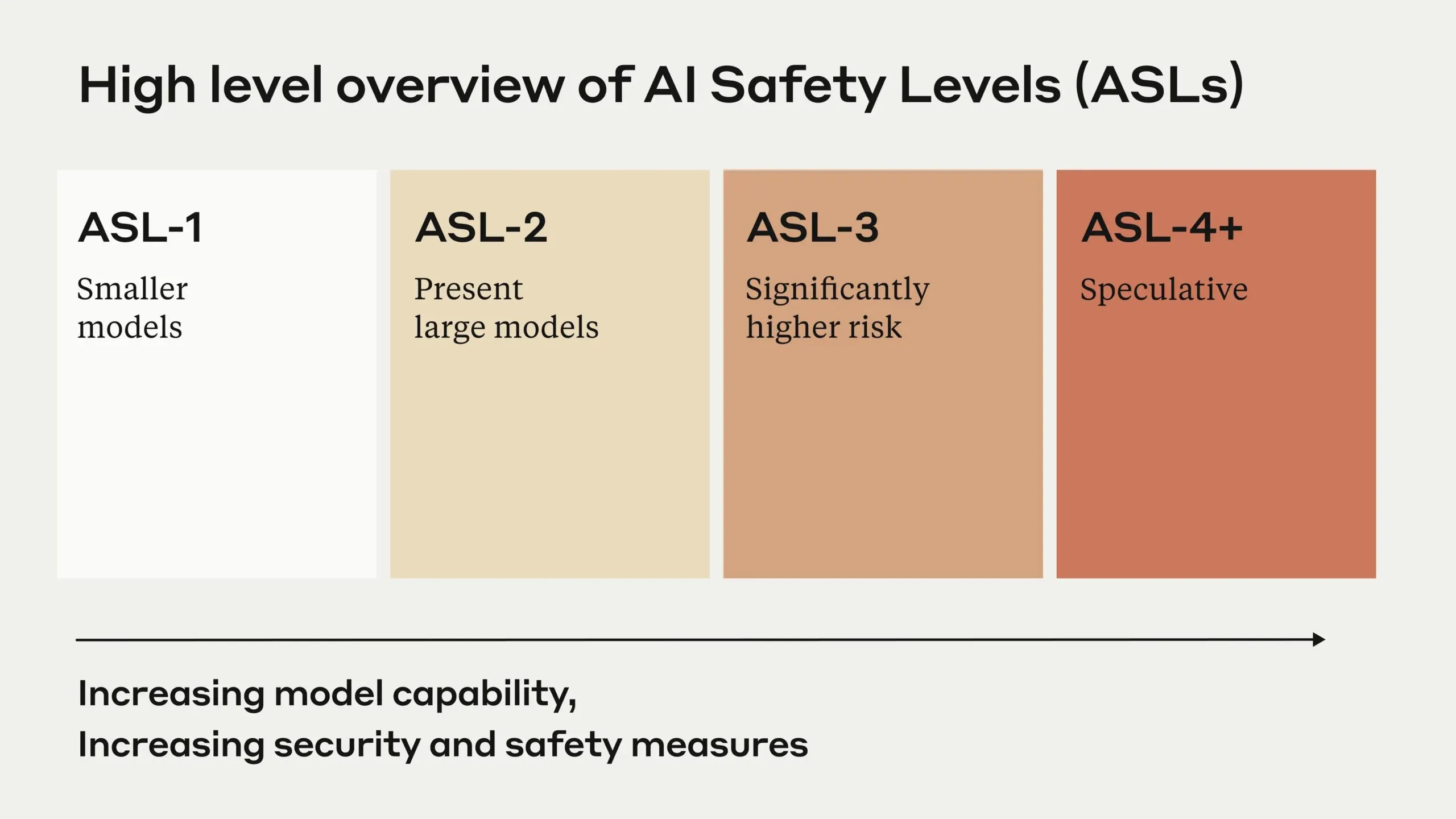

The fast development has prompted Anthropic to implement a complete Accountable Scaling Coverage, establishing more and more strict safety necessities as AI capabilities develop. The corporate’s AI Security Ranges framework ranks methods based mostly on their potential for misuse and autonomy, with greater ranges triggering extra stringent security measures.

Not like OpenAI and different opponents like Google, that are centered totally on industrial deployment, Anthropic is pursuing what Amodei calls a “race to the highest” in AI security. The corporate closely invests in mechanistic interpretability analysis, aiming to know the interior workings of AI methods earlier than they grow to be too highly effective to regulate.

This problem has led Anthropic to develop novel approaches like Constitutional AI and character coaching, designed to instill moral conduct and human values in AI methods from the bottom up. These strategies characterize a departure from conventional reinforcement studying strategies, which Amodei suggests could also be inadequate for guaranteeing the protection of competent methods.

Regardless of all of the dangers, Amodei envisions a “compressed twenty first century” the place AI accelerates scientific progress, significantly in biology and medication, probably condensing a long time of development into years. This acceleration may result in breakthroughs in illness therapy, local weather change options, and different vital challenges going through humanity.

Nonetheless, the CEO expressed severe considerations about financial implications, significantly the focus of energy within the arms of some AI firms. “I fear about economics and the focus of energy,” he mentioned, “when issues have gone improper for people, they’ve typically gone improper as a result of people mistreat different people.”

“That’s possibly in some methods much more than the autonomous threat of AI.”

Edited by Sebastian Sinclair

Usually Clever E-newsletter

A weekly AI journey narrated by Gen, a generative AI mannequin.